RESEARCH LINES

In our research team, curiosity never runs out. Together, we are building the future and setting the course for a new era of limitless possibilities. Each of us brings a unique perspective, special skills, and passion to this team. We complement and challenge each other constantly to reach new heights. No matter how much we’ve accomplished so far, we always know there’s more to discover, explore, and create. AI is an ever-evolving field, and we’re here to lead the way.

RL1 / Deep Learning for Vision and Language

New theories and methods to continue unraveling the potential of Deep Learning to create advanced cognitive systems with a focus on vision and language.

RL2 / Neuro-symbolic AI

Integration of logical-probabilistic and deep learning-based AI, with each approach invoking the other’s solutions, and injecting and using semantics in deep learning.

RL3 / Brain-inspired AI

Bringing together scientists from neuroscience, cognitive psychology, and AI to exploit insights from the anatomical and cognitive operations of biological brains to inform AI research.

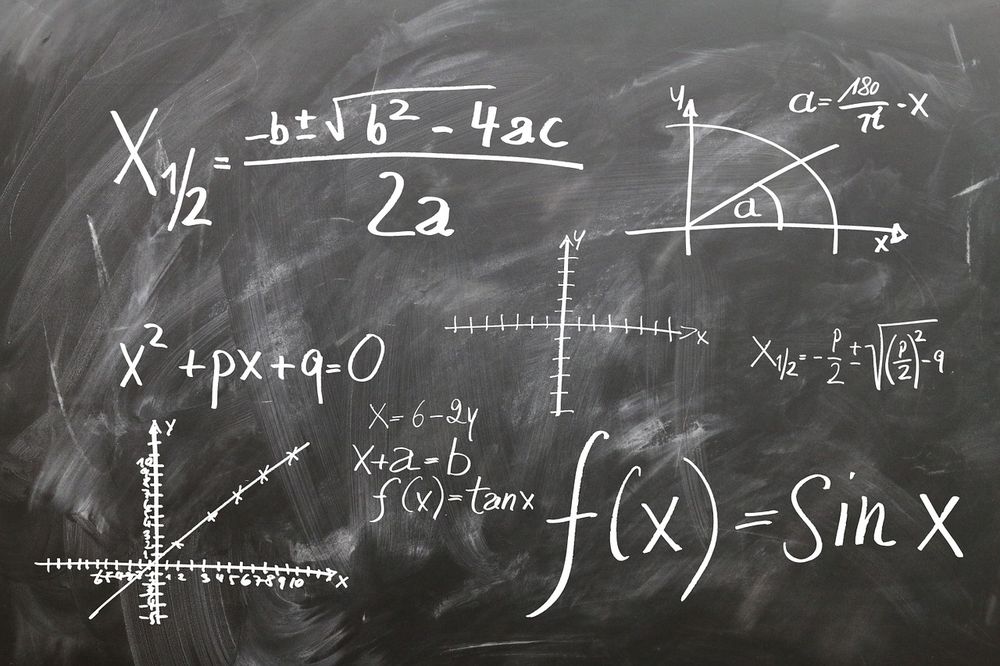

RL4 / Physics-based machine learning

Bringing together mathematicians, physicists, and AI scientists to exploit insights from the physical sciences to develop machine learning models based on causal relationships.

RL5 / Human-centric AI

New technologies for a fair, safe and transparent use of AI in society, as well as methodologies to assess its impact. Promote new tools for interpretable and explainable AI.

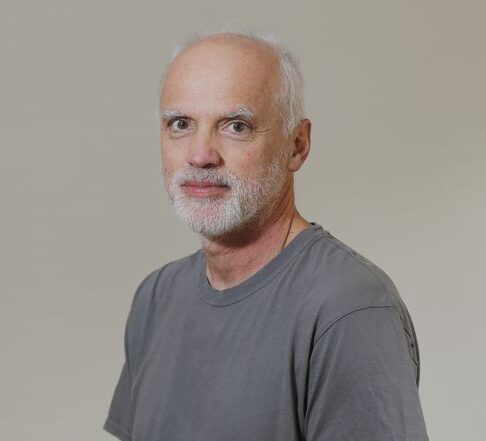

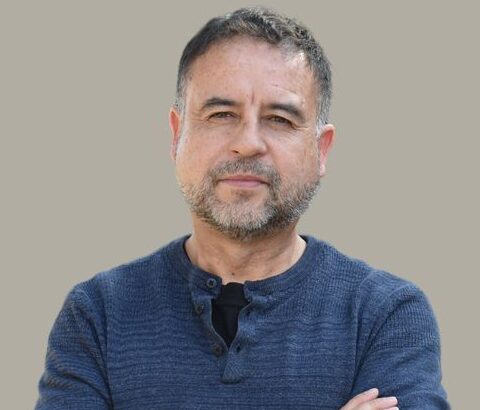

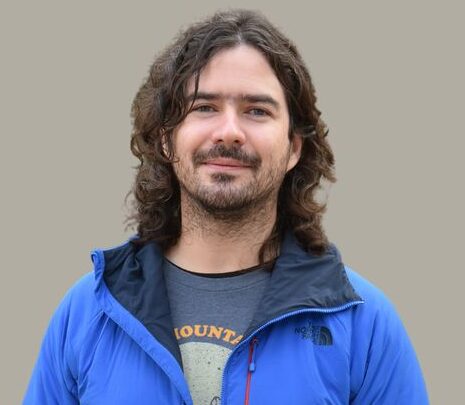

Main researchers

CENIA's researchers

In addition to conducting cutting-edge research, our team of permanent researchers also actively participates in technology transfer projects, working closely with companies, organizations and other institutions to translate our knowledge into practical and viable solutions. They also participate in conferences, workshops and academic events to keep up to date with the latest advances in the field of artificial intelligence.

Cenia Scientific Committee

Research associates

Young researchers

Postdocs

Associated collaborators

International collaborators

ABSTRACT

Continuous learning occurs naturally in human beings. However, Deep Learning methods suffer from a problem known as Catastrophic Forgetting (CF) that consists of a model drastically decreasing its performance on previously learned tasks when it is sequentially trained on new tasks. This situation, known as task interference, occurs when a network modifies relevant weight values as it learns a new task. In this work, we propose two main strategies to face the problem of task interference in convolutional neural networks. First, we use a sparse coding technique to adaptively allocate model capacity to different tasks avoiding interference between them. Specifically, we use a strategy based on group sparse regularization to specialize groups of parameters to learn each task. Afterward, by adding binary masks, we can freeze these groups of parameters, using the rest of the network to learn new tasks. Second, we use a meta learning technique to foster knowledge transfer among tasks, encouraging weight reusability instead of overwriting. Specifically, we use an optimization strategy based on episodic training to foster learning weights that are expected to be useful to solve future tasks. Together, these two strategies help us to avoid interference by preserving compatibility with previous and future weight values. Using this approach, we achieve state-of-the-art results on popular benchmarks used to test techniques to avoid CF. In particular, we conduct an ablation study to identify the contribution of each component of the proposed method, demonstrating its ability to avoid retroactive interference with previous tasks and to promote knowledge transfer to future tasks.
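The two ingredients of the first strategy, a group sparse penalty and binary masks that freeze the groups a task ends up using, can be illustrated with a minimal sketch. It assumes that parameters are grouped as plain lists of weights (for example, one group per convolutional filter) and that a group counts as "used" when its L2 norm exceeds a small threshold; the function names, the threshold `tol`, and the learning rate are hypothetical choices for illustration, not details taken from the paper.

```python
import math

def group_lasso_penalty(groups):
    """Group sparse (group lasso) penalty: sum over groups of sqrt(size) * L2 norm.
    Adding it to the loss pushes whole groups of weights toward zero."""
    return sum(math.sqrt(len(g)) * math.sqrt(sum(w * w for w in g)) for g in groups)

def active_mask(groups, tol=1e-3):
    """Binary mask per group: 1 if the group is in use (norm above tol), else 0.
    The threshold tol is an assumption made for this sketch."""
    return [1 if math.sqrt(sum(w * w for w in g)) > tol else 0 for g in groups]

def masked_sgd_step(groups, grads, frozen, lr=0.1):
    """One gradient step that leaves frozen groups (mask == 1) untouched,
    so only the remaining capacity is used to learn the new task."""
    return [
        list(g) if is_frozen else [w - lr * d for w, d in zip(g, gr)]
        for g, gr, is_frozen in zip(groups, grads, frozen)
    ]

# Toy state after training on a first task: one group in use, one free.
groups = [[3.0, 4.0], [0.0, 0.0]]
penalty = group_lasso_penalty(groups)
mask = active_mask(groups)

# Training on a second task only moves the group that the first task left free.
grads = [[1.0, 1.0], [1.0, 1.0]]
updated = masked_sgd_step(groups, grads, mask)
```

In this toy run the first group keeps its values while the second group is updated, which is the sense in which masking avoids overwriting weights relevant to earlier tasks.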

ABSTRACT

Every year physicians face an increasing demand for image-based diagnosis from patients, a problem that can be addressed with recent artificial intelligence methods. In this context, we survey works in the area of automatic report generation from medical images, with emphasis on methods using deep neural networks, with respect to: (1) Datasets, (2) Architecture Design, (3) Explainability and (4) Evaluation Metrics. Our survey identifies interesting developments, but also remaining challenges. Among them, the current evaluation of generated reports is especially weak, since it mostly relies on traditional Natural Language Processing (NLP) metrics, which do not accurately capture medical correctness.

ABSTRACT

This article describes the participation and results of the PUC Chile team in the Tuberculosis task in the context of the ImageCLEFmedical challenge 2021. We were ranked 7th based on the kappa metric and 4th in terms of accuracy. We describe three approaches we tried in order to address the task. Our best approach used 2D images visually encoded with a DenseNet neural network, whose representations were concatenated to finally output the classification with a softmax layer. We describe in detail this and the other two approaches, and we conclude by discussing some ideas for future work.

Publisher: Logical Methods in Computer Science

ABSTRACT

We investigate the application of the Shapley value to quantifying the contribution of a tuple to a query answer. The Shapley value is a widely known numerical measure in cooperative game theory, and in many applications of game theory, for assessing the contribution of a player to a coalition game. It was established in the 1950s and is theoretically justified as the unique wealth-distribution measure that satisfies some natural axioms. While this value has been investigated in several areas, it has received little attention in data management. We study this measure in the context of conjunctive and aggregate queries by defining corresponding coalition games. We provide algorithmic and complexity-theoretic results on the computation of Shapley-based contributions to query answers, and for the hard cases we present approximation algorithms.
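As an illustration of the coalition-game view, the following minimal sketch computes the exact Shapley value of a tuple for a Boolean query by enumerating all subsets of the other tuples. The toy relations `R` and `S`, the query `q`, and the function names are assumptions made for this example; the brute-force enumeration is exponential and is not the algorithm studied in the paper.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial

def shapley(facts, query, target):
    """Exact Shapley value of `target` among `facts` for a 0/1-valued query.
    Each subset S of the other facts is weighted by |S|! (n - |S| - 1)! / n!."""
    others = [f for f in facts if f != target]
    n = len(facts)
    total = Fraction(0)
    for k in range(len(others) + 1):
        weight = Fraction(factorial(k) * factorial(n - k - 1), factorial(n))
        for subset in combinations(others, k):
            s = set(subset)
            # Marginal contribution of the target tuple to this coalition.
            total += weight * (query(s | {target}) - query(s))
    return total

def q(db):
    """Hypothetical Boolean conjunctive query: is there an x with R(x) and S(x)?"""
    r_values = {x for rel, x in db if rel == "R"}
    s_values = {x for rel, x in db if rel == "S"}
    return 1 if r_values & s_values else 0

facts = [("R", "a"), ("S", "a"), ("S", "b")]
values = {f: shapley(facts, q, f) for f in facts}
```

Here `R(a)` and `S(a)` are jointly required for the query to hold, so each receives a value of 1/2, while `S(b)` never changes the answer and receives 0.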

ABSTRACT

Word embeddings are vital descriptors of words in unigram representations of documents for many tasks in natural language processing and information retrieval. The representation of queries has been one of the most critical challenges in this area because a query consists of only a few terms and has little descriptive capacity. Strategies such as average word embeddings can enrich the queries’ descriptive capacity since they favor the identification of related terms from the continuous vector representations that characterize these approaches. We propose a data-driven strategy to combine word embeddings. We use IDF combinations of embeddings to represent queries, showing that these representations outperform the average word embeddings recently proposed in the literature. Experimental results on benchmark data show that our proposal performs well, suggesting that data-driven combinations of word embeddings are a promising line of research in ad-hoc information retrieval.
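One plausible reading of an IDF combination is a weighted average in which each query term's embedding is scaled by its inverse document frequency. The sketch below contrasts that with the plain average; the toy corpus, the two-dimensional embeddings, the unsmoothed formula log(N / df), and the function names are all assumptions for illustration and may differ from the combination used in the paper.

```python
import math

def idf_weights(docs):
    """Unsmoothed IDF per term: log(N / document frequency)."""
    n = len(docs)
    df = {}
    for doc in docs:
        for term in set(doc):
            df[term] = df.get(term, 0) + 1
    return {term: math.log(n / count) for term, count in df.items()}

def combine(query, emb, weights=None):
    """Weighted average of the query terms' embeddings.
    With weights=None every term counts equally (plain average)."""
    terms = [t for t in query if t in emb]
    dim = len(next(iter(emb.values())))
    w = [1.0 if weights is None else weights.get(t, 0.0) for t in terms]
    total = sum(w)
    if total == 0:
        return [0.0] * dim  # no informative term in the query
    return [sum(wi * emb[t][d] for wi, t in zip(w, terms)) / total for d in range(dim)]

# Toy corpus and hypothetical 2-d embeddings.
docs = [["the", "cat"], ["the", "dog"], ["the", "cat", "sat"]]
emb = {"the": [1.0, 0.0], "cat": [0.0, 1.0]}
query = ["the", "cat"]

average_vec = combine(query, emb)
idf_vec = combine(query, emb, idf_weights(docs))
```

Because "the" appears in every document its IDF is zero, so the IDF-weighted query vector coincides with the embedding of "cat", whereas the plain average gives both terms equal influence.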

ABSTRACT

We present a study of an artificial neural architecture that predicts human ocular scanpaths while subjects free-view different image types. The analysis compares several metrics that characterize scanpath patterns and aim to measure spatial and temporal errors, such as the MSE, ScanMatch, cross-correlogram peaks, and MultiMatch. Our methodology begins by choosing one architecture and training different parametric models per subject and image type, which allows the models to be adjusted to each person and a given set of images. We find that there is a clear difference in prediction when people free-view images with high visual content (high-frequency contents) and low visual content (no-frequency contents). The input features selected for predicting the scanpath are saliency maps calculated from foveated images together with the past of the ocular scanpath of subjects, modeled by our architecture called FovSOS-FSD (Foveated Saliency and Ocular Scanpath with Feature Selection and Direct Prediction).

The results of this study could be used to improve the design of gaze-controlled interfaces and virtual reality, to better understand how humans visually explore their surroundings, and to pave the way for future research.

Embeddings are core components of modern model-based Collaborative Filtering (CF) methods, such as Matrix Factorization (MF) and Deep Learning variations. In essence, embeddings are mappings of the original sparse representation of categorical features (e.g., users and items) to dense low-dimensional representations. A well-known limitation of such methods is that the learned embeddings are opaque and hard to explain to the users. On the other hand, a key feature of simpler KNN-based CF models (a.k.a. user/item-based CF) is that they naturally yield similarity-based explanations, i.e., similar users/items as evidence to support model recommendations. Unlike related works that try to attribute explicit meaning (via metadata) to the learned embeddings, in this paper, we propose to equip the learned embeddings of MF with meaningful similarity-based explanations. First, we show that the learned user/item …